GPU Boost 2.0

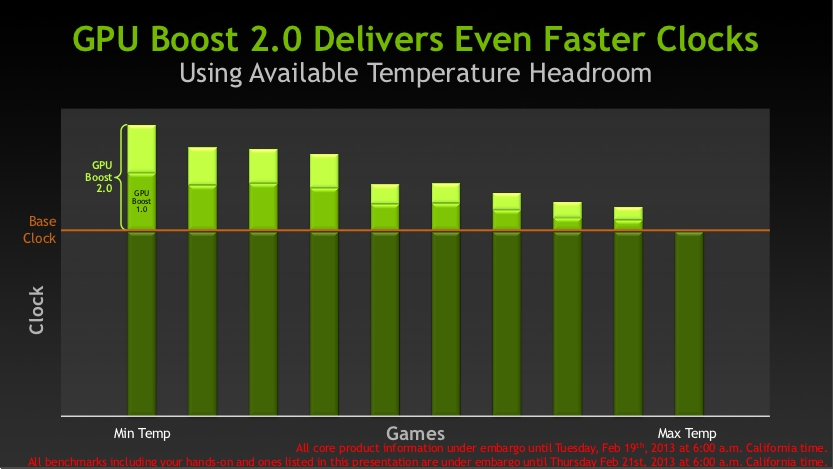

NVIDIA updated its GPU Boost technology with the GTX Titan. First introduced with the GeForce GTX 680 (detailed here, read it if you are not familiar with GPU Boost), GPU Boost replaces orthodox methods of clock speed control with speed-ranges between a nominal clock speed and a boost frequency. If the GPU senses an app could do with more performance, power-draw permitting, it automatically overclocks the graphics card. With GPU Boost 2.0, temperature is also taken into account to give the GPU dynamic overclocking headroom.

The temperature-based boost limits could help enthusiasts who use extreme cooling methods, such as liquid nitrogen, by reducing the control of power-based boost, which would allow them to achieve higher clock speeds as long as they keep the GPU within a temperature limit. Also introduced is over-voltage: it allows you to manually adjust the GPU core voltage. On-the-fly adjustments are also possible, to stabilize your overclock offsets.

The following graph shows how changes in GPU temperature drive the selected clock. We tested this with a static scene that renders the same content every frame, which produces a constant GPU and memory load that would otherwise not be possible.

GPU clock is plotted on the vertical axis using the blue MHz scale on the left. Temperature is plotted on the vertical axis using the red °C scale on the right. Time runs along the horizontal axis.

As you can see, clock behavior is fundamentally different to how Boost 1.0 behaved. The card immediately goes to its maximum boost clock (993 MHz) and stays there as long as temperature allows. Once the card reaches the temperature target of 80°C, Boost 2.0 will quickly dial down frequencies to slow down the temperature increase after which there is a brief period where Boost 2.0 will try to increase clocks again in hopes of a less demanding game scene, which could allow for higher clocks again. Once that proves futile (we used a static scene), clocks are dropped down to the base clock levels of 836 MHz to keep temperature at around 80°C. As the temperature rises, clocks stay at base clock. Only once the GPU reaches 95°C do clocks go down to 418 MHz to avoid damaging the card. Once the card reaches 100°C, it is shut off to prevent any damage (we had to block the fan intake for the card to actually run that hot).
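The steady-state behavior in this test can be summarized as a simple threshold model. The C++ sketch below is only that: it assumes the clock and temperature values we measured on our card, the function and constant names are our own, and it ignores both the brief retry phase described above and the power-based limits that the real Boost 2.0 algorithm also applies.

```cpp
#include <cassert>

// Values observed on our GTX Titan sample in the test above.
// This is an illustrative model, not NVIDIA's actual algorithm.
constexpr int kMaxBoostMHz = 993;
constexpr int kBaseMHz = 836;
constexpr int kThrottleMHz = 418;
constexpr int kShutdownMHz = 0;  // card switches itself off

constexpr int kDefaultTempTargetC = 80;
constexpr int kThrottleTempC = 95;
constexpr int kShutdownTempC = 100;

// Returns the clock the card settles on at a given GPU temperature
// under a constant load, for a given temperature target.
inline int steadyStateClockMHz(int tempC, int tempTargetC = kDefaultTempTargetC) {
    // The highest target offered by the tools we used was 94°C.
    assert(tempTargetC < kThrottleTempC);

    if (tempC >= kShutdownTempC) return kShutdownMHz;
    if (tempC >= kThrottleTempC) return kThrottleMHz;
    if (tempC >= tempTargetC) return kBaseMHz;
    return kMaxBoostMHz;
}
```

In this model, `steadyStateClockMHz(82, 85)` returns 993 while `steadyStateClockMHz(82)` returns 836, which mirrors how a higher temperature target lets the card hold its boost clock for longer.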

Voltage increase

With Titan, NVIDIA introduces the option of voltage control called 'overvoltaging'. This lets enthusiasts unlock extra voltage in software to facilitate additional overclocking.

Using EVGA Precision, we increased the GPU voltage by the maximum level available (+0.038 V up to 1.20 V). We did not increase clock speeds, the power target, temperature target, or any other setting.

In all the following graphs, the blue line shows the performance improvement (or reduction) of the GTX Titan in comparison to its baseline performance at 80°C (black line). We used our test suite at 1920x1200 for all these tests. The dotted green line shows the average of the blue line.
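For readers who want to reproduce this kind of comparison with their own numbers, the two lines can be computed roughly as follows. This is a minimal sketch: the function names are hypothetical, the inputs are whatever per-test results you measured (for example average FPS), and it assumes the dotted green line is a plain arithmetic mean.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Percent change of each result relative to its baseline (the blue line).
inline std::vector<double> relativeChangePercent(const std::vector<double>& baseline,
                                                 const std::vector<double>& tested) {
    assert(baseline.size() == tested.size());
    std::vector<double> changes;
    changes.reserve(baseline.size());
    for (std::size_t i = 0; i < baseline.size(); ++i) {
        assert(baseline[i] > 0.0);
        changes.push_back((tested[i] / baseline[i] - 1.0) * 100.0);
    }
    return changes;
}

// Arithmetic mean of the per-test changes (assumed to be the dotted green line).
inline double meanPercent(const std::vector<double>& changes) {
    assert(!changes.empty());
    double sum = 0.0;
    for (double c : changes) sum += c;
    return sum / static_cast<double>(changes.size());
}
```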

As you can see from the benchmark results, we enabled a very small performance gain just by making the new increased voltages available to the boost clock algorithm. Normally, overvoltage is used to stabilize manual overclocking, but it looks like NVIDIA's boost 2.0 is smart enough to exploit that potential on its own.

Temperature Target

Using software tools provided by the board partners, users can adjust the GPU temperature target to their liking. If you want the card to boost to higher clocks, for example, you can adjust the temperature target up (for example, from the default of 80°C to 85°C). The GPU will then boost to higher clock speeds until it reaches the new temperature target.

With GPU Boost 2.0 being temperature-dependent, NVIDIA suggests that adding a waterblock onto the Titan could result in additional performance benefits because the card can boost higher for longer, since it does not have to worry about GPU temperature getting too high.

We set the fan speed of our card to maximum (which is limited to 85% by the vBIOS), adjusted the temperature target to 94°C (highest) and ran our test suite. The results show that real-life performance increases by an average of 2.5%. This is representative of what to expect of the card without any additional overclocking and with watercooling. In our test, the GPU temperature never exceeded 65°C, so any temperature limitations were effectively removed and the card could boost as high as the power limits would allow. It also shows that the card already comes with very decent fan settings out of the box, since increasing fan speed just to gain a little bit of performance is certainly not worth it.

Authors: Louis Bavoil and Iain Cantlay

With all modern graphics APIs (D3D11, D3D12, GL4 and Vulkan), it is possible for an application to query the elapsed GPU time for any given range of render calls by using timestamp queries. Most game engines today use this mechanism to measure the GPU time spent on a whole frame and per pass. This blog post includes full source code for a simple D3D12 application (SetStablePowerState.exe) that can be run to disable and restore GPU Boost at any time, for all graphics applications running on the system. Disabling GPU Boost helps you get more deterministic GPU times from timestamp queries. And because the clocks are changed at the system level, you can run SetStablePowerState.exe even if your game uses a graphics API other than D3D12. The only requirement is that you use Windows 10 and have the Windows 10 SDK installed.
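As a reminder of what such a measurement boils down to, a pair of timestamps is converted to a time using the timestamp frequency reported by the API (in D3D12, that is what ID3D12CommandQueue::GetTimestampFrequency returns). The helper below is a small sketch of that arithmetic only; its name is our own, and the query and readback code around it is not shown.

```cpp
#include <cassert>
#include <cstdint>

// Converts a begin/end pair of GPU timestamps to milliseconds.
// ticksPerSecond is the timestamp frequency reported by the graphics API.
inline double gpuTimeMs(std::uint64_t beginTicks,
                        std::uint64_t endTicks,
                        std::uint64_t ticksPerSecond) {
    assert(ticksPerSecond > 0);
    assert(endTicks >= beginTicks);
    return static_cast<double>(endTicks - beginTicks) * 1000.0 /
           static_cast<double>(ticksPerSecond);
}
```

The conversion itself is exact arithmetic; what varies between runs is how many ticks a pass takes, and that is the variation the rest of this post is about.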

Motivation

On some occasions, we have found ourselves confused by the fact that the measured GPU time for a given pass we were working on would change over time, even if we did not make any change to that pass. The GPU times would be stable within a run, but would sometimes vary slightly from run to run. Later on, we learned that this can happen as a side effect of the GPU having a variable Core Clock frequency, depending on the current GPU temperature and possibly other factors such as power consumption. This can happen with all GPUs that have variable frequencies, and can happen with all NVIDIA GPUs that include a version of GPU Boost, more specifically all GPUs based on the Kepler, Maxwell and Pascal architectures, and beyond.

SetStablePowerState.exe

All NVIDIA GPUs that have GPU Boost have a well-defined Base Clock frequency associated with them. That is the value of the GPU Core Clock frequency that the GPU should be able to sustain while staying within the reference power usage and temperature targets. For the record, for each GeForce GPU, the Base Clock is specified in the associated Specification page on GeForce.com.

Using D3D12, there is an easy way for an application to request the NVIDIA driver to lock the GPU Core Clock frequency to its Base Clock value: by using the ID3D12Device::SetStablePowerState method. When calling SetStablePowerState(TRUE), a system-wide change of GPU power-management policy happens for the NVIDIA GPU associated with the current D3D12 device, and the current GPU Core Clock gets locked to the reference Base Clock recorded in the VBIOS for that GPU, unless thermal events happen. If the GPU detects that it's overheating, it will then down-clock itself even if SetStablePowerState(TRUE) was called. But in practice, that should never happen if the GPU is in a properly cooled case and its fan is working properly. The result is that the GPU Core Clock frequency is then stable at Base Clock once any D3D12 application calls SetStablePowerState(TRUE) in the system. In other words, GPU Boost gets disabled. And our driver takes care of restoring the previous GPU power-management state when the locking D3D12 device gets released.

Knowing all that, we have written a simple standalone D3D12 application (SetStablePowerState.exe) that can lock and unlock the current GPU Core Clock frequency for any NVIDIA GPU with GPU Boost. The GPU Core Clock frequency gets instantly locked when launching this app, so it can be launched anytime you want to start/stop profiling GPU times. You can monitor your current GPU Core Clock frequency by using NVAPI (see Appendix) or by using an external GPU monitoring tool such as GPU-Z.

Using this standalone SetStablePowerState.exe application to lock the clocks before/after profiling GPU times makes it unnecessary to ever call ID3D12Device::SetStablePowerState from a game engine directly. We actually recommend never calling this D3D12 method from engine code, especially for applications that have both D3D11 and D3D12 paths, to avoid any confusion when comparing GPU profiling results on D3D12 vs D3D11.

Gotchas

Using SetStablePowerState only modifies the GPU Core Clock frequency but does not modify the GPU Memory Clock frequency. So if an application gets a 1:1 ratio between GPU Core Clock and GPU Memory Clock on a normal run, SetStablePowerState can shift that ratio to as low as 0.8:1. That's an issue worth knowing about, as relative performance limiters will shift slightly. So when GPU Boost is disabled, a pass that is both math-throughput and memory-bandwidth limited may become more math limited; or, conversely, it may become relatively less memory limited.
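To make the limiter shift concrete, here is a deliberately crude sketch. It assumes a pass takes as long as the slower of its math and memory components, that math time scales inversely with the Core Clock, and that memory time is unaffected because the Memory Clock does not change. The type and function names are hypothetical, and real passes overlap work in ways this model does not capture.

```cpp
#include <algorithm>
#include <cassert>

// Toy model of one render pass: the slower component sets the pass time.
struct PassEstimate {
    double mathMs;    // time if only math throughput mattered
    double memoryMs;  // time if only memory bandwidth mattered

    double totalMs() const { return std::max(mathMs, memoryMs); }
    bool mathLimited() const { return mathMs > memoryMs; }
};

// Rescales an estimate taken at the boosted Core Clock to the Base Clock.
// Only the math component changes; the Memory Clock is left untouched.
inline PassEstimate atBaseClock(const PassEstimate& boosted,
                                double boostMHz,
                                double baseMHz) {
    assert(boostMHz > 0.0 && baseMHz > 0.0);
    return {boosted.mathMs * boostMHz / baseMHz, boosted.memoryMs};
}
```

With a pass that is balanced at 1.0 ms math and 1.0 ms memory under boost, and a base clock at 0.8 times the boost clock, the model gives 1.25 ms of math time against an unchanged 1.0 ms of memory time, so the pass is now math limited.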

Finally, for the SetStablePowerState call to succeed, you need to have the Windows 10 SDK installed. With Windows 10 up to Version 1511, that's all you need. But with more recent versions of Windows 10 (starting from the Anniversary Update), you also need to enable 'developer mode' in the OS settings, otherwise the call to SetStablePowerState will cause a D3D12 device removal.

Afterword: Some History and How Our Advice Evolved

If you have been following our DX12 Do's And Don'ts blog, you may have noticed that the advice on SetStablePowerState has changed. That could use some explanation…

In the first wave of DX12 games, we saw a couple of beta pre-releases that always called SetStablePowerState(TRUE) by default. As we discussed above, this API call significantly lowers the Core Clock frequency on NVIDIA GPUs and does not represent the end-user experience accurately. It is therefore quite inappropriate to call it by default in a shipping product, or even a beta.

We have also seen confusion result from the use of SetStablePowerState because it only works when the D3D12 debug layer is present on a system. We have seen multiple cases where development engineers and QA departments get different performance results because SetStablePowerState fails on some systems and the failure was quietly ignored.

Hence, our recommendation was to avoid SetStablePowerState or use it very thoughtfully and carefully.

For the Windows 10 Anniversary Update (aka Redstone), Microsoft changed the implementation: 'SetStablePowerState now requires developer mode be enabled; otherwise, device removal will now occur.' (http://forums.directxtech.com/index.php?topic=5734.new). So any calls to SetStablePowerState will obviously fail on end users' systems or most QA systems. This is a change for the better and makes much of our previous advice irrelevant.

We are still left with the question of whether or not to test with SetStablePowerState. Do you test with reduced performance and more stable results? Do you test end-user performance and accept some variability? Do you monitor clocks and show a warning when variability exceeds a threshold? To be perfectly honest, we have changed our minds more than once at NVIDIA DevTech. This is for good reasons because there is no one true answer. The answer depends on exactly what you are trying to achieve and what matters most to you. We have done all three. We have largely settled on stabilizing the clocks for our in-depth, precise analyses.

Appendix: SetStablePowerState.cpp

Appendix: Monitoring the GPU Core Clock using NVAPI

If you want to monitor your NVIDIA GPU Core Clock frequency without having to use an external tool, you can use the NvAPI_GPU_GetAllClockFrequencies function from NVAPI like in the example code below. We recommend not calling this function every frame, to avoid the risk of introducing any significant performance hit. Instead, we recommend calling it at the beginning and end of a given time interval (for instance before/after a GPU profiling session, or before/after playing a level), and displaying a warning if the GPU Core Clock frequency has changed during that interval.
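The begin/end check described above can be kept independent of how the clock is actually read. The sketch below is one way to structure it, under stated assumptions: `ClockReaderKHz` is a hypothetical callback that would wrap the NVAPI query in a real tool (the wrapper is not shown), readings are assumed to be in kHz, and the names and the tolerance parameter are our own.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>

// Hypothetical callback returning the current GPU Core Clock in kHz.
// In a real tool this would wrap the NVAPI clock query; not shown here.
using ClockReaderKHz = std::function<std::uint32_t()>;

struct ClockCheck {
    std::uint32_t beginKHz;
    std::uint32_t endKHz;
    bool changed;  // true if the clock moved by more than the tolerance
};

// Reads the clock once before and once after the workload, never per frame.
inline ClockCheck profileWithClockCheck(const ClockReaderKHz& readClock,
                                        const std::function<void()>& workload,
                                        std::uint32_t toleranceKHz = 0) {
    assert(readClock && workload);
    const std::uint32_t begin = readClock();
    workload();
    const std::uint32_t end = readClock();
    const std::uint32_t delta = begin > end ? begin - end : end - begin;
    return {begin, end, delta > toleranceKHz};
}
```

A caller would run its profiling session as the workload and show a warning whenever `changed` is true, since GPU times measured in that interval are not directly comparable with a run at a fixed clock.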